...

- inside a graphical interactive job using the `pro-viz` service (main documentation, in Polish: Obliczenia w trybie graficznym: pro-viz)

- in a SLURM batch job, through a SLURM script submitted from the command line

- in a SLURM batch job submitted from the Relion GUI, started via the `pro-viz` service in a dedicated partition

Interactive Relion job with Relion GUI


To start an interactive Relion job with access to the Relion GUI:

Log into the Prometheus login node

```bash
ssh <login>@pro.cyfronet.pl
```

Load the `pro-viz` module

```bash
module load tools/pro-viz
```

- Start a `pro-viz` job

Submit a `pro-viz` job to the queue.

CPU-only job

```bash
pro-viz start -N <number-of-nodes> -P <cores-per-node> -p <partition/queue> -t <maximal-time> -m <memory>
```

GPU job

```bash
pro-viz start -N <number-of-nodes> -P <cores-per-node> -g <number-of-gpus-per-node> -p <partition/queue> -t <maximal-time> -m <memory>
```

- Check the status of the submitted job

```bash
pro-viz list
```

Get the password to the `pro-viz` session (once the job is already running)

```bash
pro-viz password <JobID>
```

Example output

```
Web Access link: https://viz.pro.cyfronet.pl/go?c=<hash>&token=<token>
link is valid until: Sun Nov 14 02:04:02 CET 2021
session password (for external client): <password>
full commandline (for external client): vncviewer -SecurityTypes=VNC,UnixLogin,None -via <username>@pro.cyfronet.pl -password=<password> <worker-node>:<display>
```

- Connect to the graphical `pro-viz` session

  - you can use the web link obtained in the previous step

  - you can use a VNC client (e.g. TurboVNC); the client configuration is described in Obliczenia w trybie graficznym: pro-viz (in Polish)
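If the web link is needed in a script (for example to print it or open it in a browser), it can be extracted from the output of `pro-viz password`. The following is only a sketch: the helper name `extract_web_link` is hypothetical, and it assumes the link is printed on its own line starting with `Web Access link:`, as in the example output above.

```shell
# Hypothetical helper: read `pro-viz password <JobID>` output on stdin
# and print only the URL from the "Web Access link:" line.
# Assumes the output layout matches the example shown in this document.
extract_web_link() {
    sed -n 's/^Web Access link: //p'
}

# Possible usage on the login node (not executed here):
#   pro-viz password <JobID> | extract_web_link
```

If the output format of `pro-viz password` changes, the pattern in `sed` needs to be adjusted accordingly.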

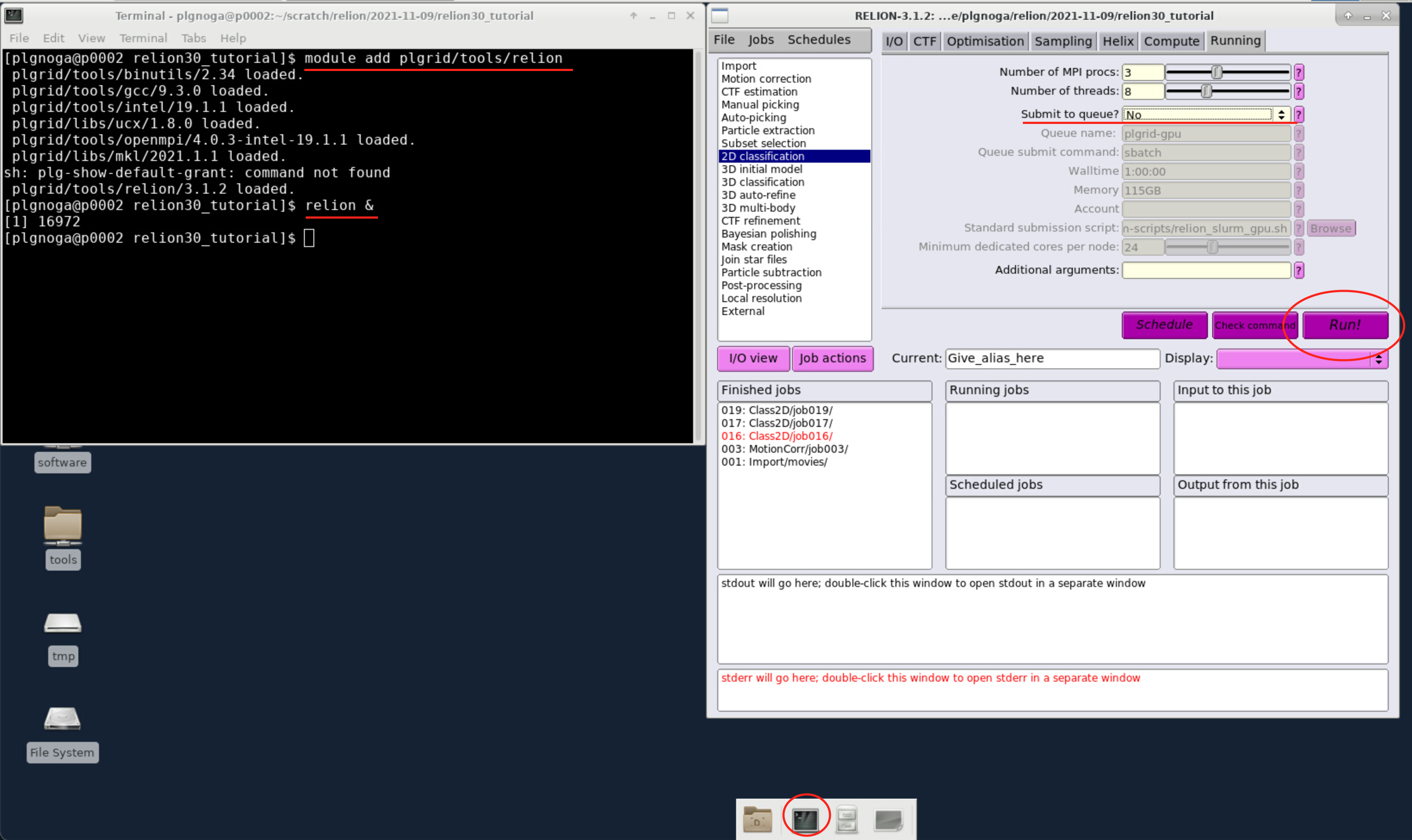

- Set up the Relion environment

When connected to the GUI, open a terminal and load the Relion module

```bash
module load plgrid/tools/relion
```

Start the Relion GUI in the background

```bash
relion &
```

- Use Relion GUI for computation.

...

After finishing work, terminate the job

```bash
pro-viz stop <JobID>
```
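The CPU-only and GPU submissions described above differ only in the `-g` option. A small wrapper can make that explicit. This is a minimal sketch, not part of `pro-viz` itself: the function name `proviz_start_cmd` is hypothetical, it only prints the command line instead of running it, and the walltime and memory values in the usage comments are placeholders whose exact format should be checked in the `pro-viz` documentation.

```shell
# Hypothetical helper: print a `pro-viz start` command line.
# Arguments: nodes cores-per-node partition walltime memory [gpus-per-node]
# Only the options listed in this document are used (-N -P -g -p -t -m).
proviz_start_cmd() {
    nodes="$1"
    cores="$2"
    partition="$3"
    walltime="$4"
    memory="$5"
    gpus="${6:-0}"

    cmd="pro-viz start -N $nodes -P $cores"
    # Add -g only when GPUs are requested
    if [ "$gpus" -gt 0 ]; then
        cmd="$cmd -g $gpus"
    fi
    echo "$cmd -p $partition -t $walltime -m $memory"
}

# Possible usage (values are placeholders, not recommendations):
#   proviz_start_cmd 1 6 plgrid 1:00:00 16GB
#   proviz_start_cmd 1 6 plgrid-gpu 1:00:00 16GB 2
```

Printing the command first makes it easy to review the requested resources before actually submitting the job.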

Relion in SLURM batch jobs

...

Log into the Prometheus login node

```bash
ssh <login>@pro.cyfronet.pl
```

Move to the Relion project directory

```bash
cd $SCRATCH/<relion-project>
```

Info (usage of filesystems): during computations, the Relion project should be stored on the $SCRATCH filesystem on Prometheus (more info: https://kdm.cyfronet.pl/portal/Prometheus:Basics#Disk_storage). For longer-term storage, use the $PLG_GROUPS_STORAGE/<team_name> filesystem.

Submit the job

```bash
sbatch script.slurm
```

Example CPU-only SLURM script

```bash
#!/bin/bash
# Number of allocated nodes
#SBATCH --nodes=1
# Number of MPI processes per node
#SBATCH --ntasks-per-node=4
# Number of threads per MPI process
#SBATCH --cpus-per-task=6
# Partition
#SBATCH --partition=plgrid
# Requested maximal walltime
#SBATCH --time=0-1
# Requested memory per node
#SBATCH --mem=110GB
# Computational grant
#SBATCH --account=<name-of-grant>

export RELION_SCRATCH_DIR=$SCRATCHDIR

module load plgrid/tools/relion/3.1.2

mpirun <relion-command>
```

Example GPU SLURM script

```bash
#!/bin/bash
# Number of allocated nodes
#SBATCH --nodes=1
# Number of MPI processes per node
#SBATCH --ntasks-per-node=4
# Number of threads per MPI process
#SBATCH --cpus-per-task=6
# Partition
#SBATCH --partition=plgrid-gpu
# Number of GPUs per node
#SBATCH --gres=gpu:2
# Requested maximal walltime
#SBATCH --time=0-1
# Requested memory per node
#SBATCH --mem=110GB
# Computational grant
#SBATCH --account=<name-of-grant>

export RELION_SCRATCH_DIR=$SCRATCHDIR

module load plgrid/tools/relion/3.1.2

mpirun <relion-command> --gpu $CUDA_VISIBLE_DEVICES
```

Info title GPUs usage GPUs are available only for selected grants in partitions

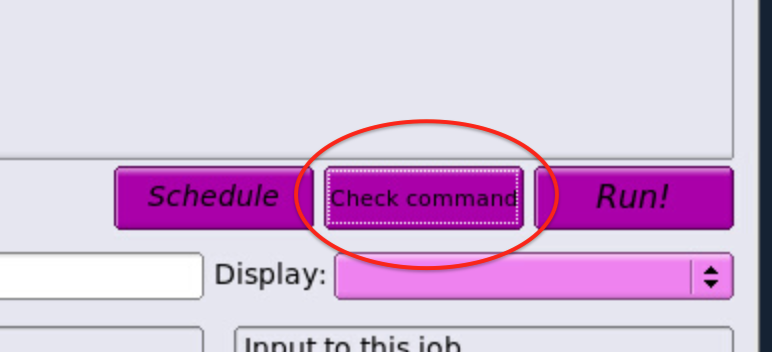

plgrid-gpuandplgrid-gpu-v100. One should aways use--gpu $CUDA_VISIBLE_DEIVCESto request GPUs allocated for job.Info title Relion command Relion command syntax could be checked using GUI and copied to script
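The example scripts request 4 MPI processes per node with 6 threads each, i.e. 4 × 6 = 24 cores per node. A Relion command copied from the GUI usually carries its own thread count (Relion's `--j` option), and it should agree with `--cpus-per-task`. The sketch below shows one way to keep the two in sync using the `SLURM_CPUS_PER_TASK` variable, which SLURM sets inside the job when `--cpus-per-task` is given. The helper names `cores_per_node` and `relion_thread_flag` are hypothetical and only illustrate the idea.

```shell
# Cores requested per node = MPI processes per node x threads per process.
# Arguments: tasks-per-node cpus-per-task
cores_per_node() {
    echo $(( $1 * $2 ))
}

# Print the Relion thread option based on the SLURM allocation.
# Falls back to a single thread when SLURM_CPUS_PER_TASK is not set
# (e.g. when run outside of a SLURM job).
relion_thread_flag() {
    echo "--j ${SLURM_CPUS_PER_TASK:-1}"
}

# Inside the batch script this could be used as (not executed here):
#   mpirun <relion-command> $(relion_thread_flag)
```

If the command copied from the GUI already contains `--j`, it is enough to check that its value matches `--cpus-per-task` rather than appending the option a second time.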

Check job status

```bash
squeue
```

or

```bash
pro-jobs
```
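To follow one particular job rather than the whole queue, the job ID printed by `sbatch` can be captured at submission time. By default `sbatch` reports `Submitted batch job <JobID>`. The sketch below extracts the ID from that line; the helper name `job_id_from_sbatch` is hypothetical.

```shell
# Hypothetical helper: read sbatch output on stdin and print the job ID,
# i.e. the fourth field of the line "Submitted batch job <JobID>".
job_id_from_sbatch() {
    awk '/^Submitted batch job/ { print $4 }'
}

# Possible usage on the login node (not executed here):
#   jobid=$(sbatch script.slurm | job_id_from_sbatch)
#   squeue -j "$jobid"
```

`squeue -j` restricts the listing to the given job ID, which is convenient when many jobs are queued under the same account.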

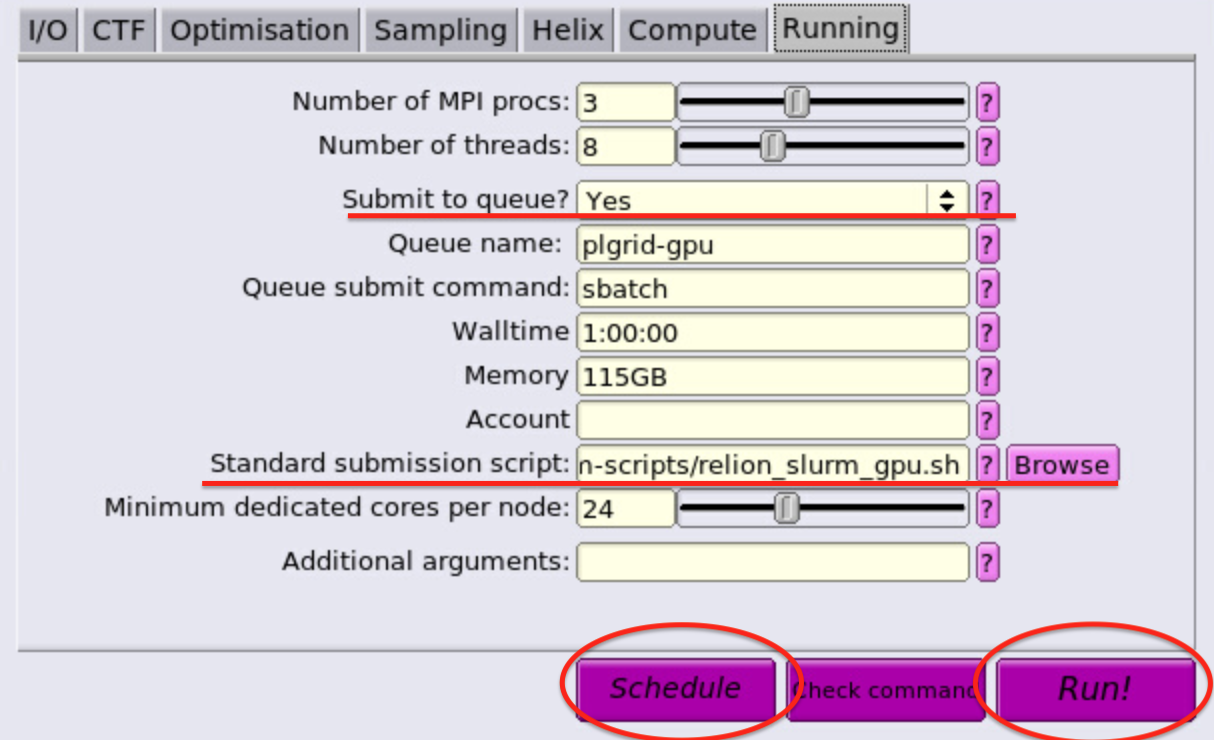

Submitting SLURM jobs from Relion GUI

- Start a job as described for the `pro-viz` session, but use the `plgrid-services` partition/queue.

- In the Relion GUI, use "Submit to queue" in the "Running" tab

- Select submission scripts from directory

- Monitor jobs either from the Relion GUI or from the command line using the `squeue` or `pro-jobs` commands